Microsoft Exposes Utilization Of AI Tools By State-Backed Hackers

State-backed hackers from Russia, China, and Iran have been leveraging tools developed by Microsoft-backed OpenAI to enhance their cyber espionage capabilities.

According to Microsoft, hacking groups affiliated with entities such as Russian military intelligence, Iran's Revolutionary Guard, and the Chinese and North Korean governments have been using large language models, a form of artificial intelligence, to refine their hacking techniques. The models draw on extensive text data to generate responses that closely resemble human language.

In response to the findings, Microsoft has imposed a blanket ban on state-backed hacking groups from accessing its AI products, regardless of any legal or terms of service violations. According to Tom Burt, Microsoft's Vice President for Customer Security, the company aims to prevent threat actors from exploiting this technology for malicious purposes.

While Russian, North Korean, and Iranian diplomatic officials have not yet commented on the allegations, China's US embassy spokesperson Liu Pengyu rejected the accusations.

Microsoft further detailed various ways in which such hacking groups utilized large language models, including research on military technologies, spear-phishing campaigns, and crafting convincing emails to deceive targets.

Earlier this year, Microsoft warned that Russia, Iran, and China are likely planning to influence the upcoming elections in the United States and other countries in 2024. Microsoft's Threat Analysis Center also confirmed that Iran has intensified its cyberattacks and influence operations since 2020.

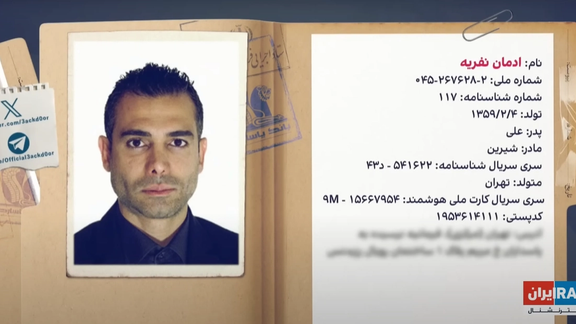

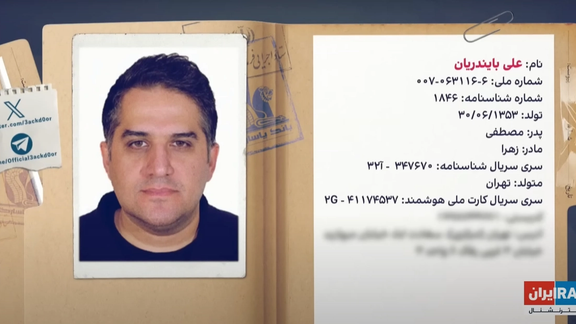

Iran International has obtained information about two oil smugglers who help circumvent US sanctions and are affiliated with top officials close to Iran's Supreme Leader Ali Khamenei.

The two, Edman Nafrieh (Adman Nafariyeh) and Ali Bayandarian, collaborated with Pasargad Bank to sell millions of dollars' worth of Iranian oil illegally, a common practice for the Islamic Republic meant to circumvent the US sanctions on its oil industry and banking sector.

The operation allegedly took place under the auspices of the Headquarters of Imam's Directive (Executive Headquarters of Imam's Order or simply Setad), a parastatal organization under direct control of the Supreme Leader. A committee known as the "Cover Committee," which includes key figures of Iran’s Supreme National Security Council, purportedly issued credit lines to intermediaries for bypassing sanctions.

The Islamic Republic has numerous mechanisms in place through a wide range of entities and organizations to sell its oil via third parties to evade sanctions. The country has also started giving oil to state organizations, including the IRGC, as a way to boost their budgets without actually allocating any money to them. Such operations are usually carried out by a network of state bodies and businesspeople with close ties to the regime, who usually gain huge profits in the process. However, with every new administration in office, former assets become a liability and bear the brunt of the political games of the regime.

Edman Nafrieh, 43, who lives in an upmarket part of Tehran, is one such businessperson trusted by the Setad to sell Iranian oil. His real name, Arman, was changed to Adman in 2007 and later to Adrian, as he gradually whitewashed his Iranian origins. Finally, in January last year, he changed his last name to Touran to complete his more Western cover image. Through a network of energy companies in Tehran and Dubai, Nafrieh is still active in illegal sales of Iran’s oil as well as in the bitumen industry.

In one case in 2019, two senior figures of the Cover Committee, Yahya Alavi and Mohammad Mirmohammadi, asked Pasargad Bank to issue a $500 million bank guarantee in Nafrieh's name to sell oil that was under the control of the Setad. He apparently never paid back about $300 million of the oil sale proceeds.

He was designated by the US in November 2022 along with five other people and 17 entities in a crackdown on a sanctions evasion network that provided support to Iran’s proxy in Lebanon, Hezbollah, and to the Islamic Revolutionary Guard Corps-Qods Force (IRGC-QF).

Ali Bayandarian, another member of the Setad’s oil smuggling network, was also sanctioned by the US in January 2020 as Washington designated four companies accused of purchasing Iranian oil and petrochemical products. Bayandarian was designated over his links to the companies.

Documents leaked by a cyberattack on the Iranian parliament’s media arm on Tuesday revealed the parliament's coordination with designated Iranian entities and individuals to facilitate their trade activities and conceal their identities and connections from international regulatory bodies.

Nafrieh and Bayandarian are just small cogs in Iran’s huge network tasked with keeping oil revenues flowing around global sanctions.

Iran’s oil exports have been increasing in recent years, from a low of less than 500,000 barrels per day after the US re-imposed sanctions on Iran in 2019 to more than 1,500,000 barrels per day, a rise that regime officials attribute to measures to bypass the sanctions rather than to honest interaction with the world.

One of the best-known cases is that of former tycoon Babak Zanjani, who now faces execution for embezzling the proceeds of oil sales totaling around $3.5 billion. Zanjani was arrested and convicted in 2013 after Hassan Rouhani was elected president, but has always maintained his innocence and insisted that the death sentence was "politically motivated."

Zanjani sold Iranian oil on behalf of the NIOC during President Mahmoud Ahmadinejad's second term (2009-13) through an elaborate network of black-market dealers and money-launderers, particularly in the UAE, Turkey and Malaysia. He was subsequently sanctioned by the Council of the European Union in December 2012 and by the United States Treasury in April 2013.

In the wake of US retaliatory attacks on Iran's proxy militias, the Commander-in-Chief of Iran's Islamic Revolutionary Guard Corps (IRGC) issued a renewed warning against the US getting into direct conflict with Tehran.

While the US has made it clear it prefers diplomatic dialogue and has strategically hit proxy sites in Yemen, Iraq and Syria, Hossein Salami spoke in fighting terms Wednesday, warning, "We have always fired the last shot and emerged victorious in the field."

President Joe Biden's stance on conflict with Iran has been consistently lenient throughout his term, particularly in light of the October 7th Hamas attack on Israel which triggered a proxy war across the region.

Iran's militias have launched over 180 attacks on US facilities and killed multiple personnel in retaliation for Biden's support for Israel's right to defend itself after the atrocities, which saw 1,200 people, mostly civilians, murdered and over 250 taken hostage. Biden has reacted with fewer than a dozen retaliatory strikes.

In response to a drone strike which killed three US personnel in Jordan, President Biden authorized a "multi-layered" strike on targets associated with Iran's Islamic Revolutionary Guard Corps (IRGC) in Iraq and Syria.

Joint US-UK strikes on Houthi targets have also hit key sites for the militia which has been blockading the Red Sea trade route since November and launched multiple attacks on international shipping in a bid to force Israel into a ceasefire in its war on Iran-backed Hamas in Gaza.

Following a cyber attack on over 600 Iranian government servers, the parliament's voting system broke down during proceedings on Wednesday, with MPs resorting to standing and sitting to signify their agreement or disagreement.

The live broadcast of the parliamentary session was also unavailable on the official website due to the malfunctioning of the voting mechanism following Tuesday's cyber attack claimed by the hacktivist group Uprising till Overthrow, closely linked with the Albania-based opposition Mujahideen-e Khalq (MEK) organization.

It is the latest in a series of high-level hacking incidents affecting the government and comes on the eve of the country's elections, scheduled for March 1st, which have seen extensive candidate disqualifications. Experts expect turnout to be less than 15 percent, a record low for the regime.

Shahriar Heidari, a member of the National Security Commission of the Parliament, highlighted the vulnerability of the country's cyber security infrastructure, saying, "Given that some government systems have been hacked before, and now the parliament has been hacked, it indicates the weakness of the country's cyber security structure."

Leaked documents from the breach include sensitive materials concerning the Supreme National Security Council's strategies to evade sanctions, as well as internal parliamentary documents such as a list of MPs' salaries, which showed that parliamentarians earn between 1.7 and 2.7 billion rials, equivalent to $3,200 to $5,000.

Meanwhile, Iranian workers are set to receive a government-approved average salary increase of 20 percent starting in March, amid an annual inflation rate of around 50 percent. The new minimum monthly wage has been set at 115 million Iranian rials, or about 210 US dollars.

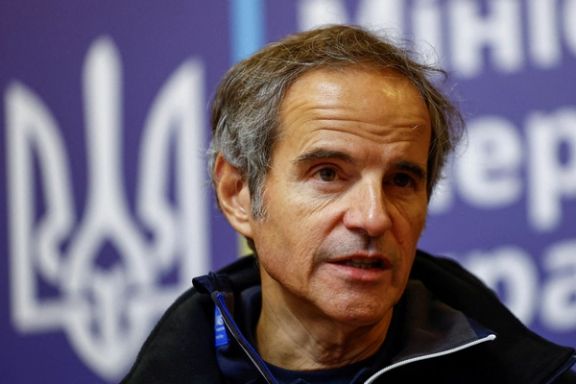

Iran's IRGC is constructing a new expansion of the Bushehr power plant, revealed just one day after the United Nations' nuclear chief, Rafael Grossi, said Iran was "not entirely transparent" on its nuclear program.

The governor of Bushehr, Ahmad Mohammadi-Zadeh, referring to the regime's armed forces, said "domestic experts are overseeing the construction of the two nuclear power plants, each with a capacity of 1080 megawatts of electricity production.”

Grossi’s comments came in response to statements made by Iran’s former nuclear energy organization chief Ali Akbar Salehi, who was also the country's foreign minister from 2010-2013.

In a televised interview, when asked whether Iran has achieved the capability to develop a nuclear bomb, Salehi avoided a direct answer, stating, "We have [crossed] all the thresholds of nuclear science and technology." Grossi said Iran is “presenting a face which is not entirely transparent when it comes to its nuclear activities”.

Iran's nuclear program has long been a source of contention in the international community, with concerns about its potential military dimensions as it continues to enrich beyond international limits.

The IRGC's involvement in Iran's nuclear activities has been a subject of scrutiny and suspicion.

While the exact nature and extent of its involvement is not always clear due to the secretive nature of Iran's nuclear program, there have been allegations of the IRGC's involvement in nuclear research, procurement of technology, and possibly even aspects of weaponization.

Several nations, including the UK, Australia and the US, have strong political lobbies calling for the designation of the IRGC as a terror group because of its brutal suppression of protesters after the Women, Life, Freedom uprising of 2022, and its murder plots on foreign soil, uncovered from Europe to South America.

Ruling hardliners in Iran are growing increasingly apprehensive about the possibility of a Republican victory in the US presidential election, which could lead to a tougher stance towards Tehran.

Among these hardliners, there's notable concern regarding the prospect of Donald Trump making a political comeback. Trump's withdrawal from the 2015 Joint Comprehensive Plan of Action (JCPOA) shattered the hopes of both Iranian hardliners and moderates, who had envisioned reaping benefits from the nuclear deal with the West in exchange for curbing their nuclear ambitions, while expanding their conventional capabilities and regional influence.

Since then, although the Biden Administration has been too kind to Tehran and has often turned a blind eye to its misconduct, politicians in Tehran still believe that Biden could have done more than give billions of dollars to Iran in return for releasing US hostages and release Iran's frozen assets in South Korea, Iraq and elsewhere.

Supreme Leader Ali Khamenei and his hardline loyalists have little reason to be alarmed by the prospect of President Joe Biden taking tougher action, as they succeeded in convincing his administration to release billions of dollars. They have been led to believe that they can influence the current administration by occasional sabre rattling or by threatening a nuclear escalation, which would be stopped by more concessions from the United States.

Earlier this week, former Foreign Minister Ali Akbar Salehi implied that Iran has already surpassed the nuclear threshold.

However, the Iranian Parliament's research center warned the country's top officials, including Khamenei, about the bleak economic implications of a Trump victory in the US election.

Meanwhile, after a eulogist harshly criticized Iran's moderates, and particularly former President Hassan Rouhani, for spending too much time and resources on reviving the 2015 JCPOA nuclear deal, reformist activist Reza Nasri reminded hardliners that if they had allowed the former government to revive the JCPOA during Biden’s presidency, most of its restrictions would have disappeared in due course.

Nasri further cautioned the ruling hardliners that within a year, Iran's nuclear dossier would be revisited at the UNSC, posing a challenging scenario should Donald Trump or Nikki Haley win the US presidential election. While Trump's foreign policy largely revolved around relations with Ukraine and Russia, Haley has consistently adopted a tough stance on Tehran and its leading clerical figures.

Highlighting the potential repercussions, Nasri warned that Iran's UNSC dossier could adversely impact three generations of Iranians.

Conversely, the Majles Research Center highlighted the concerning trend of Iran's diminishing foreign currency reserves over recent years, likening it to a form of economic disarmament. The Center cautioned that a return of Trump to the White House could result in more sanctions, which would be a shock to Iran's financial markets. Furthermore, Trump has promised to make a deal with Russia and to focus on containing China. In that case, China is likely to reduce its oil purchases from Iran, which would further shrink Iran's foreign currency reserves.

Furthermore, the Research Center pointed out another implication of Trump's potential return to the White House: challenges in Iran's economic partnership with Russia. Babak Negahdari, Chairman of the Majles Research Center, underscored the current decline in Iran's economy, evident from the situation of industries in the stock market, and emphasized the looming challenges ahead.

For instance, he highlighted a projected $3.7 billion deficit for importing essential commodities, which would require tapping into foreign currency reserves or the Central Bank. Negahdari warned of the likelihood of increased sanctions against Iran and the resultant shock to financial markets under a Trump presidency, suggesting that despite the Biden Administration's tenure, Iran's economic crisis may persist. He cited Iran's growing reliance on the UAE for marine logistics as a potential threat to food security and financial stability, indicating the pressing challenges facing Iran's economic landscape.